The Black Sea AI Gigafactory and Romania’s Contribution to Europe’s AI Agenda

Europe is rapidly building out continent-wide AI infrastructure, and the numbers reflect the scale of this ambition. Following the launch of the AI Innovation Package in 2024, which expanded EuroHPC’s mandate to support AI model development, the EU has already established 13 AI Factories. In 2025, the Commission raised the stakes with InvestAI, a plan to mobilize EUR 200 billion (mostly private investment), of which up to EUR 20 billion is a public contribution dedicated to gigafactories.

Together with the Apply AI strategy, Europe is moving toward an ecosystem of at least 17 publicly or public-privately funded AI factories and gigafactories, alongside a growing number of private projects. NVIDIA alone recently announced a roadmap for building 20 AI factories in Europe, including 5 gigafactories, bringing the company’s active projects on the continent to 22, with another 15 planned by 2030, according to the Centre for European Policy Studies (CEPS). (See the full list of NVIDIA’s projects in Europe here.)

At the national level, in late November the Romanian government approved a memorandum mandating the Ministry of Energy and the Ministry of Finance to coordinate the implementation of the Black Sea AI Gigafactory project, an AI and supercomputing hub worth up to EUR 5 billion. The race for European funding is under way, and Romanian authorities are already preparing the official proposal, to be submitted in March 2026.

What better moment to revisit the premises of the AI Gigafactory program and assess Romania’s plans and chances?

From AI Factories to AI Gigafactories: Objectives, Funding, and Perspectives

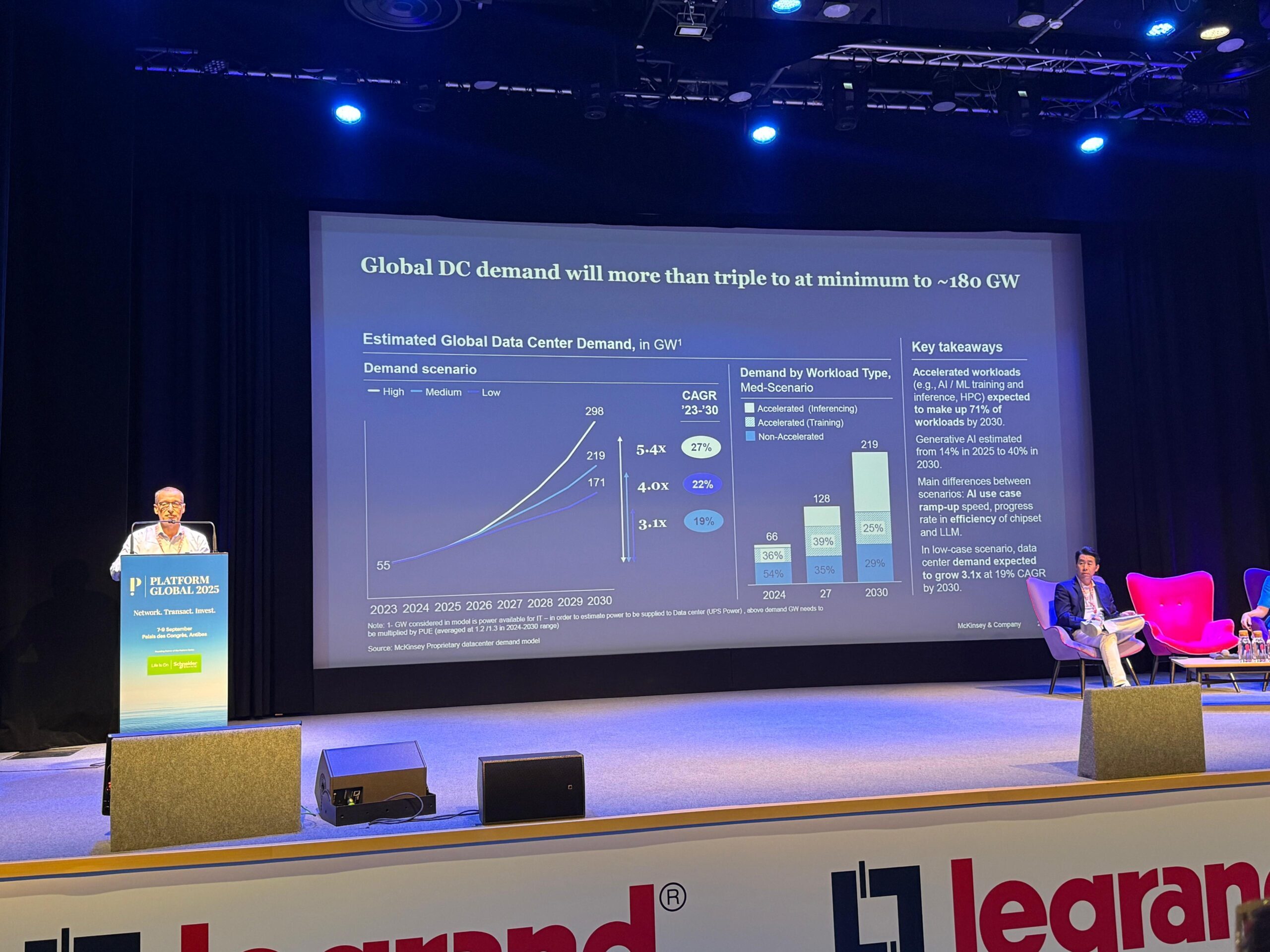

In February 2025, we reported that the President of the European Commission, Ursula von der Leyen, had launched InvestAI, an initiative that includes a dedicated EUR 20 billion fund for building AI Gigafactories (AIGFs). The program aims to develop at least five such “gigafactories”: massive compute infrastructures conceived as an extension of the AI Factories program, integrating over 100,000 AI chips in highly efficient, automated data centers and capable of training models with hundreds of trillions of parameters.

Through InvestAI, together with contributions from member states, the EU seeks to provide researchers, startups, and industry with access to large-scale computing capacity, accelerating the development of advanced AI models and strengthening Europe’s technological sovereignty.

The estimated cost of an AI Gigafactory is EUR 3–5 billion, with funding shared between the public and private sectors. Public contributions, financed by the European Commission and the member states, can cover up to 35% of CAPEX, while the remaining investment and 100% of OPEX come from private companies and investment funds. At the European level, CAPEX funding for AI Gigafactories therefore breaks down roughly as follows: 17% from the European Commission, 17% from EU member states, and 66% from the private sector.
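The CAPEX arithmetic above can be sketched in a few lines of Python. This is a minimal illustration, assuming the public share is taken at its full 35% ceiling and split equally between the Commission and the host member state; the actual split for any given project is negotiated and may differ. The names `split_capex` and `CapexSplit` are hypothetical helpers, not part of any official tool.

```python
from dataclasses import dataclass
from decimal import Decimal

# Assumption (illustrative only): the public share is taken at its 35% ceiling
# and split equally between the European Commission and the host member state.
PUBLIC_CAPEX_CEILING = Decimal("0.35")


@dataclass(frozen=True)
class CapexSplit:
    """Hypothetical container for one gigafactory's CAPEX split, in EUR billions."""
    commission: Decimal
    member_state: Decimal
    private: Decimal


def split_capex(capex_eur_bn: Decimal) -> CapexSplit:
    """Split CAPEX at the public-funding ceiling; OPEX (100% private) is excluded."""
    public = capex_eur_bn * PUBLIC_CAPEX_CEILING
    half_public = public / 2  # equal Commission / member-state shares (assumed)
    return CapexSplit(
        commission=half_public,
        member_state=half_public,
        private=capex_eur_bn - public,
    )


if __name__ == "__main__":
    # Both ends of the article's EUR 3-5 billion cost range.
    for capex in (Decimal("3"), Decimal("5")):
        s = split_capex(capex)
        print(f"CAPEX EUR {capex} bn -> Commission {s.commission}, "
              f"member state {s.member_state}, private {s.private} (EUR bn)")
```

Run as a script, it prints the split for both ends of the cost range: for a EUR 3 billion build, EUR 0.525 billion each from the Commission and the member state and EUR 1.95 billion from private investors. An exactly equal split of the 35% ceiling gives 17.5% per public funder and 65% private, which the rounded 17/17/66 figures approximate. `Decimal` is used instead of `float` so the euro amounts come out exact.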

The AI Gigafactories program builds on the AI Factories initiative, funded through EuroHPC with nearly EUR 2 billion, but at a far larger industrial scale, creating the infrastructure needed to train advanced AI models.

- Learn more about RO AI Factory, Romania’s first AI Factory: “Interview with Victor Vevera, ICI Bucharest: What resources and services will RO AI Factory provide, and when will it become operational?”

The Countries Entering the Race for AI Gigafactories. What Comes Next?

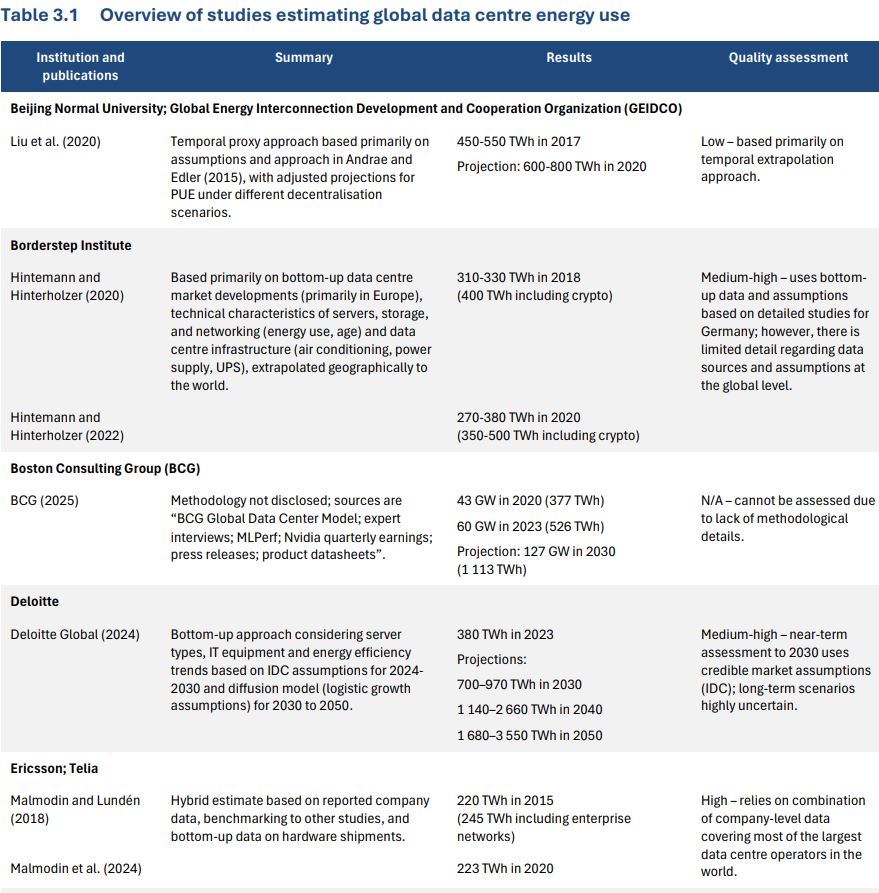

In 2024–2025, the European Commission launched a “call for expressions of interest” to assess market readiness. A total of 76 proposals were submitted, covering 60 sites across 16 member states, with total intended investments of more than EUR 230 billion. Given the scale and complexity of the submissions, the official call for AI Gigafactories (AIGFs) has been postponed to early 2026, instead of late 2025 as originally planned.

Although the European Commission has not officially disclosed which member states submitted expressions of interest by the 20 June 2025 deadline, citing confidentiality, several countries have publicly announced their participation. According to Data Center Dynamics, they include Romania, Austria (Vienna), Czechia (Prague), Spain (Mora la Nova), Germany, and the Netherlands.

Respondents to the call include data center operators, telecom companies, energy providers, European and global technology partners, and investors. According to the Commission, respondents collectively expect to procure at least three million GPUs.

The call was launched to gauge interest and create an informal registry of candidates. Between December 2025 and early 2026, the European Commission will work with respondents to refine their proposals, including technical details, location, specifications, funding structure, and sustainability plans.

- On 4 December 2025, the European Commission signed a Memorandum of Understanding (MoU) with the European Investment Bank and the European Investment Fund to support the development and financing of AI Gigafactories.

- What’s next? The official call for proposals will be launched in early 2026 (estimated submission deadline: March 2026).

- AI Gigafactories are expected to become operational by the end of 2028.

What Are Romania’s Plans for the Black Sea AI Gigafactory?

The Black Sea AI Gigafactory project proposes installing more than 100,000 AI accelerators in two stages: Phase I in Cernavodă and Phase II in Doicești, locations chosen for their energy advantages and digital infrastructure. Powered by up to 1,500 MW of zero-emission energy, primarily nuclear, the gigafactory would position Romania as a strategic supercomputing hub in Europe.

Cernavodă provides direct access to nuclear power, along with excellent fiber connectivity and submarine cable links, while Doicești offers the advantage of an “industrial site with potential for SMR co-location, hybrid cooling, and integration into the national high-speed communications network” (source). The project also includes a strong cybersecurity component, supported by the expertise built around the presence of the European Cybersecurity Competence Centre (ECCC) in Bucharest.

The memorandum mentioned at the beginning of this article, approved by the government on 27 November, puts the Ministry of Energy and the Ministry of Finance in charge, with support from the Authority for Digitalization of Romania. Their task is to coordinate preparation and negotiations and to involve all key public, private, academic, and research stakeholders in bringing the Black Sea AI Gigafactory to Romania and implementing it.

What else does the memorandum reveal?

- The Ministry of Energy becomes the main coordinator of the project and assumes primary responsibility for data center policy, leading the development and oversight of strategies in this field.

- Romania could attract additional funding through European instruments such as the European Innovation Council Fund, TechEU Scale-up, the EIB Group’s “European Tech Champions Initiative,” or InvestEU.

- The gigafactory will integrate advanced energy-efficiency technologies, from sustainable cooling and renewable energy procured through power purchase agreements (PPAs) to heat reuse and grid-resilience measures, in order to meet national and EU climate objectives.

- Selection criteria also include responsible water usage and the adoption of circular-economy principles.

- Romania is running a major investment program in nuclear energy, including the refurbishment of Unit 1 at Cernavodă (extending its lifetime by another 30 years), the construction of Units 3 and 4, and the development of advanced nuclear technologies such as the NuScale SMR at Doicești.

- The gigafactory will boost competitiveness by providing advanced compute capacity to industry, startups, SMEs, and researchers, while creating jobs and developing new skills. Through new cross-border fiber corridors and trusted compute services, it will also strengthen regional connectivity, complementing EuroHPC infrastructure and supporting interoperable collaboration between countries.

We are encouraged that, regardless of the European Commission’s decision after the March 2026 stage, Romania does not plan to pause the project. On the contrary: in the event of a negative response, authorities are prepared to recalibrate the plan, attract investors, and move forward with implementation, with technical support from the World Bank Group and other international financial institutions. Romania is not stepping back from its ambition to build a regional AI hub, and that may be the best industry news as we enter 2026!